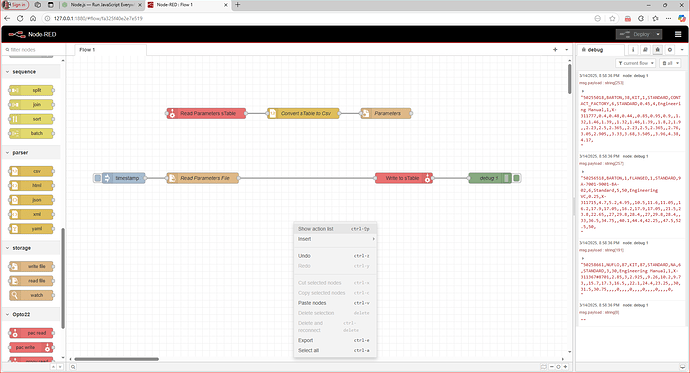

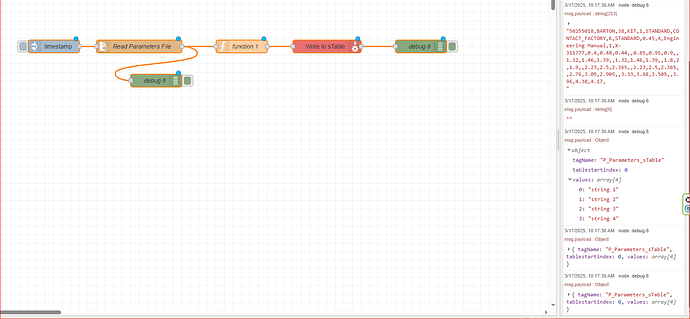

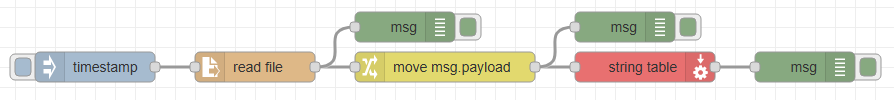

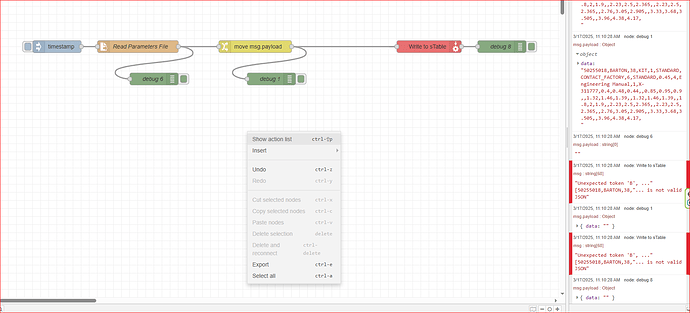

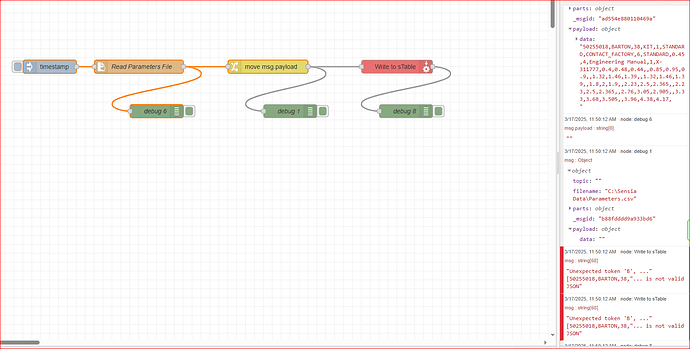

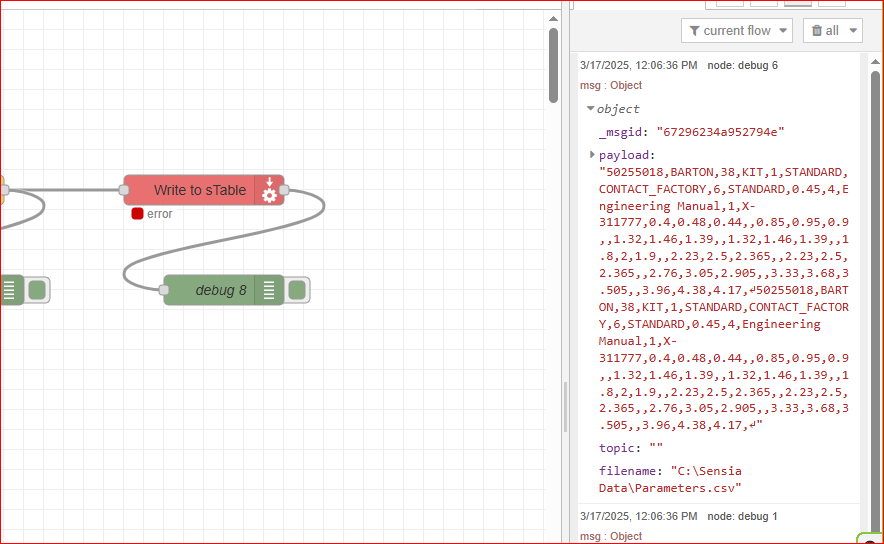

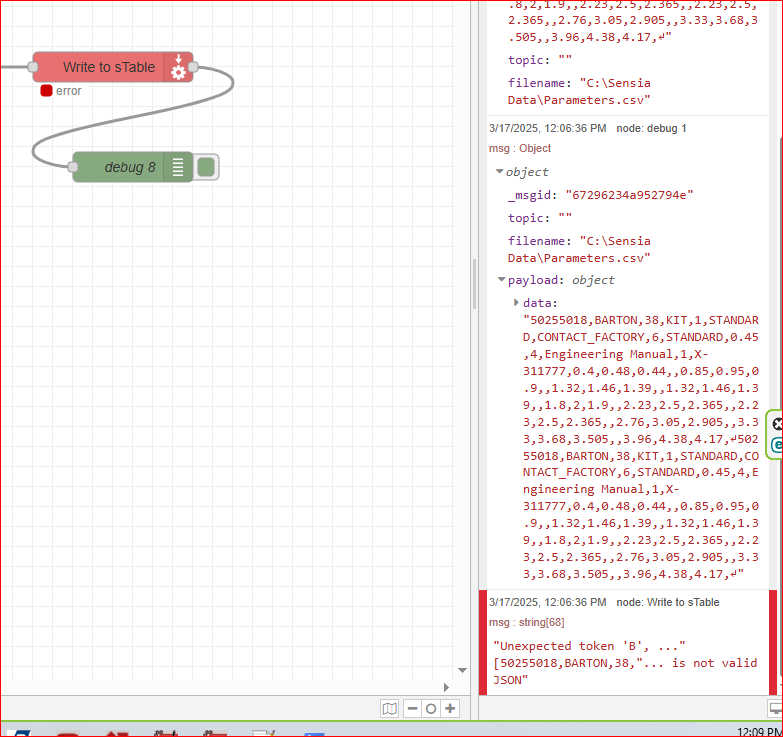

So this is as far as I got:

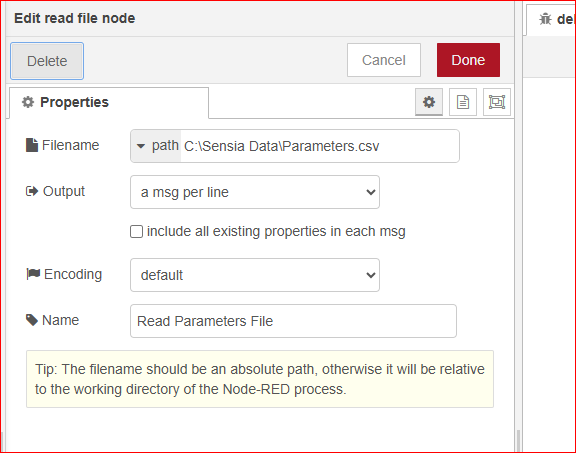

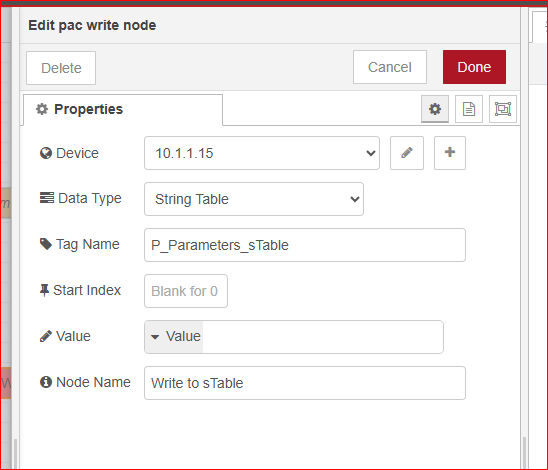

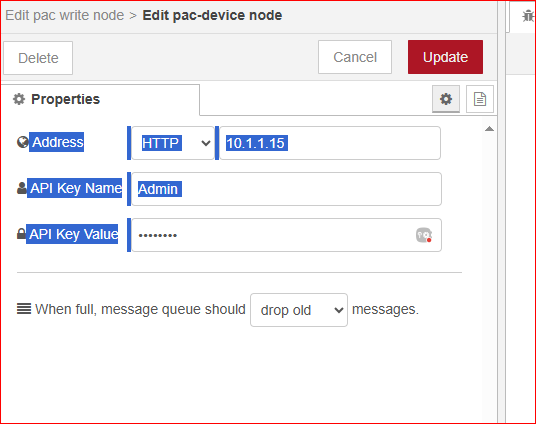

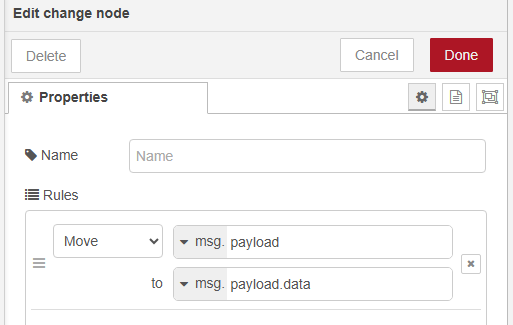

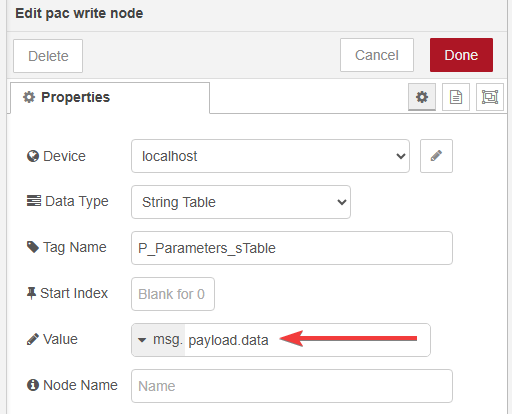

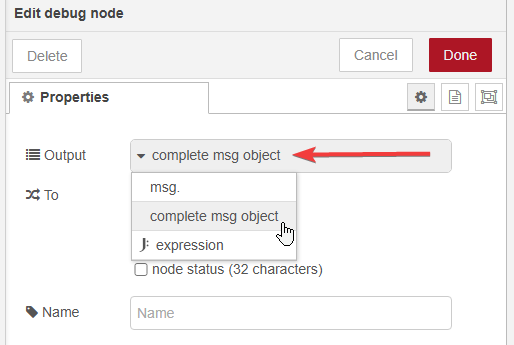

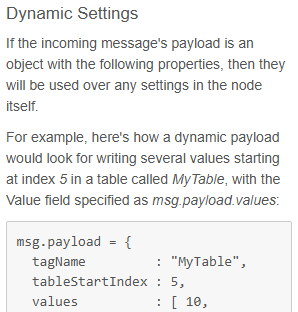

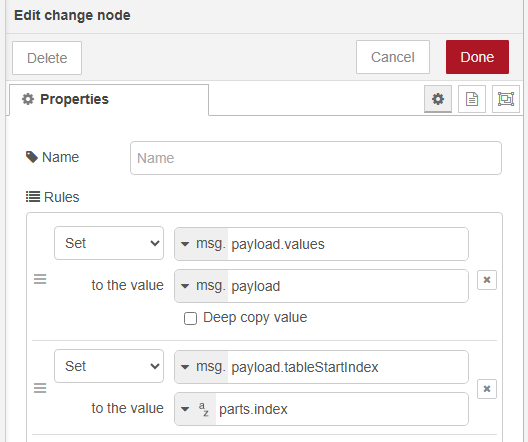

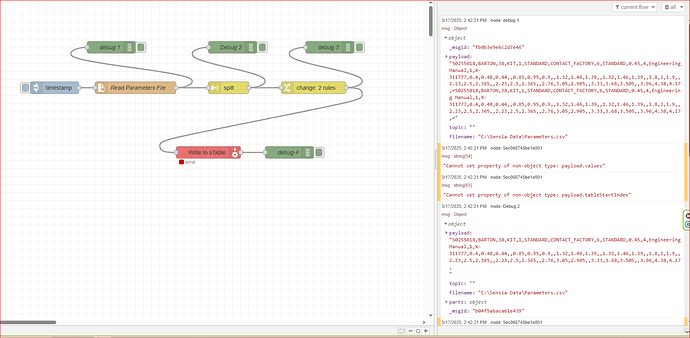

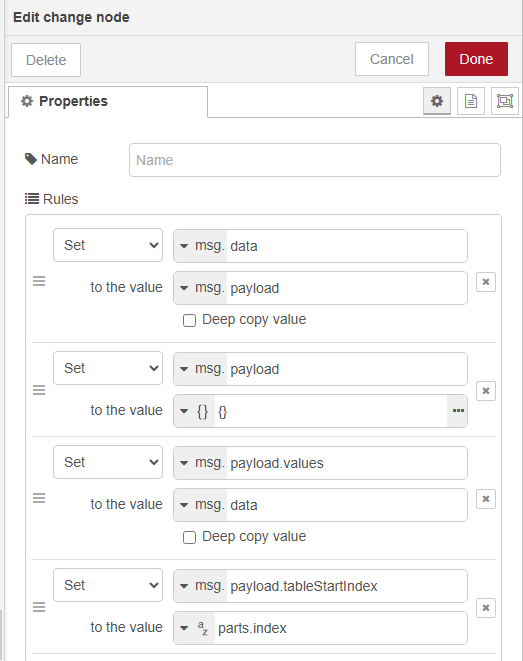

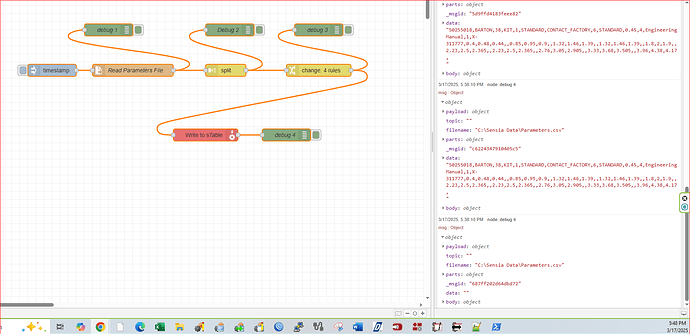

[{"id":"0eef93d3778166a3","type":"pac-write","z":"fa325f40e2e7e519","device":"cd198602403d769b","dataType":"string-table","tagName":"P_Parameters_sTable","tableStartIndex":"","value":"","valueType":"value","name":"Write to sTable","x":580,"y":380,"wires":[["2ebf8c6a8b7fdba2"]]},{"id":"bee10f1ff147ae74","type":"inject","z":"fa325f40e2e7e519","name":"","props":[{"p":"payload"},{"p":"topic","vt":"str"}],"repeat":"","crontab":"","once":false,"onceDelay":0.1,"topic":"","payload":"","payloadType":"date","x":200,"y":220,"wires":[["09e8e5e3b4cd082a"]]},{"id":"28144f3a443d0343","type":"debug","z":"fa325f40e2e7e519","name":"debug 1","active":true,"tosidebar":true,"console":false,"tostatus":false,"complete":"true","targetType":"full","statusVal":"","statusType":"auto","x":340,"y":120,"wires":[]},{"id":"2ebf8c6a8b7fdba2","type":"debug","z":"fa325f40e2e7e519","name":"debug 4","active":true,"tosidebar":true,"console":false,"tostatus":false,"complete":"true","targetType":"full","statusVal":"","statusType":"auto","x":780,"y":380,"wires":[]},{"id":"8f082f7bc82d7153","type":"debug","z":"fa325f40e2e7e519","name":"debug 3","active":true,"tosidebar":true,"console":false,"tostatus":false,"complete":"true","targetType":"full","statusVal":"","statusType":"auto","x":860,"y":120,"wires":[]},{"id":"82063e23bc14830d","type":"split","z":"fa325f40e2e7e519","name":"","splt":"\\n","spltType":"str","arraySplt":1,"arraySpltType":"len","stream":false,"addname":"","property":"payload","x":630,"y":220,"wires":[["573245e292ce71ae","e260c8b67b8e9335"]]},{"id":"09e8e5e3b4cd082a","type":"file in","z":"fa325f40e2e7e519","name":"Read Parameters File","filename":"C:\\Sensia Data\\Parameters.csv","filenameType":"str","format":"utf8","chunk":false,"sendError":false,"encoding":"utf8","allProps":false,"x":400,"y":220,"wires":[["28144f3a443d0343","82063e23bc14830d"]]},{"id":"573245e292ce71ae","type":"debug","z":"fa325f40e2e7e519","name":"Debug 2","active":true,"tosidebar":true,"console":false,"tostatus":false,"complete":"true","targetType":"full","statusVal":"","statusType":"auto","x":640,"y":120,"wires":[]},{"id":"e260c8b67b8e9335","type":"change","z":"fa325f40e2e7e519","name":"","rules":[{"t":"set","p":"data","pt":"msg","to":"payload","tot":"msg"},{"t":"set","p":"payload","pt":"msg","to":"{}","tot":"json"},{"t":"set","p":"payload.values","pt":"msg","to":"data","tot":"msg"},{"t":"set","p":"payload.tableStartIndex","pt":"msg","to":"parts.index","tot":"str"}],"action":"","property":"","from":"","to":"","reg":false,"x":860,"y":220,"wires":[["8f082f7bc82d7153","0eef93d3778166a3"]]},{"id":"cd198602403d769b","type":"pac-device","address":"10.1.1.15:80","protocol":"http","msgQueueFullBehavior":"REJECT_NEW"}]