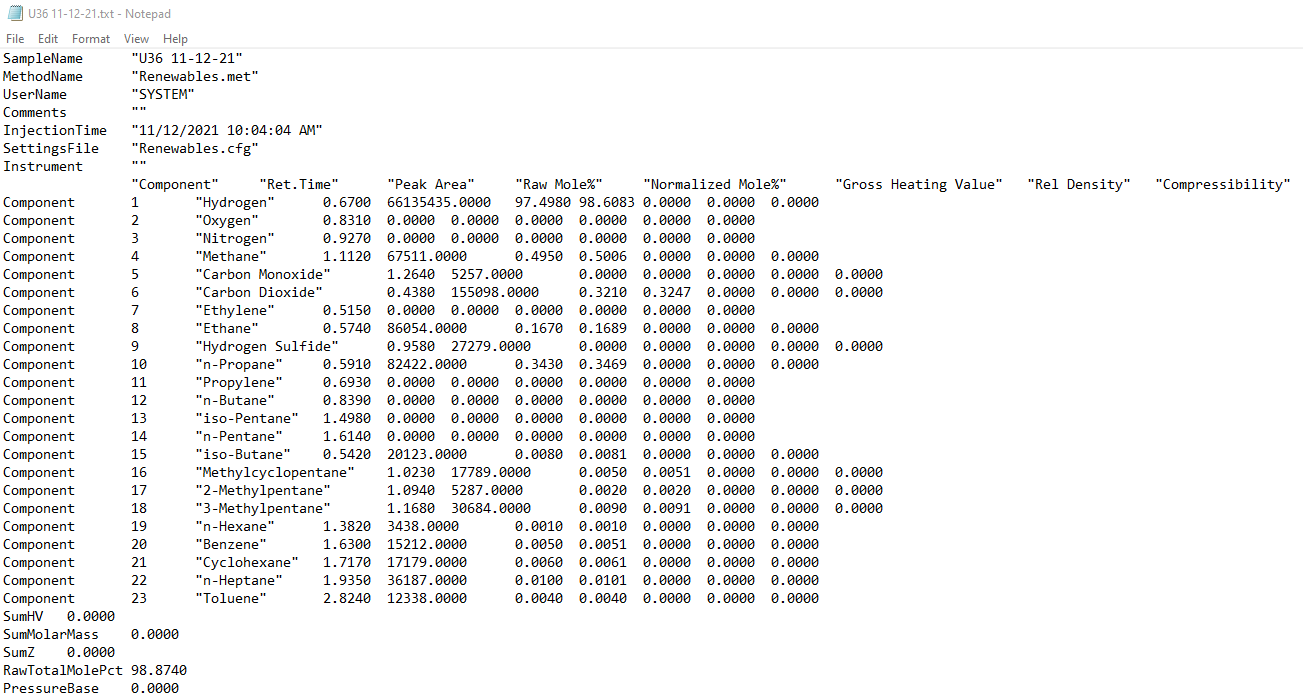

Yes, having the file as a CSV for starters would be amazing, but… it depends on whether that can be automated. If not, we can probably deal with it as it is (but CSV will definitely be a LOT better, as you will see).

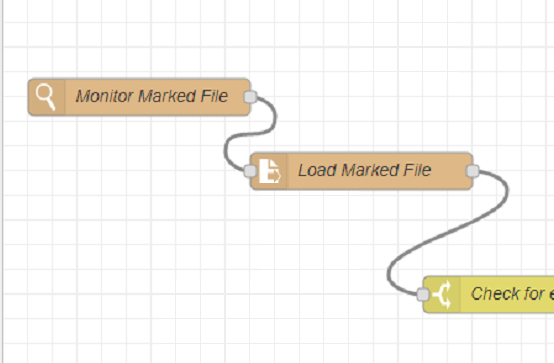

So, here is how I would attack it… Node-RED has a file in node that can read the file directly from the PC's hard drive. It needs to be triggered by some sort of inject node, either on a timer or on a cron schedule, etc.

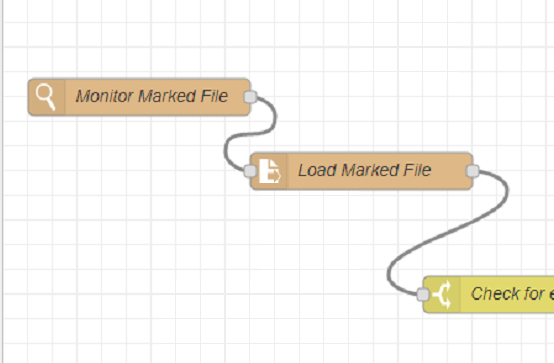

There is another node, the file watch node, that you can add to the project to detect when that file is created or updated. That takes care of that part of it.

In this flow, I am using both: I watch for the marked file, then read it when it's been updated.

Next, we need to parse the file.

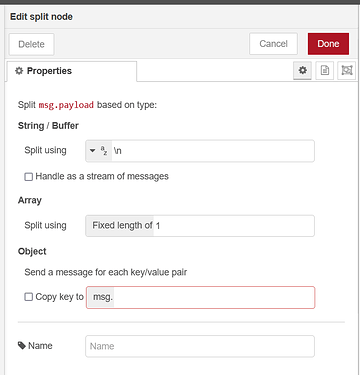

For the current text file, I would probably use the split node.

As it is, I would try splitting on " " (a space), or perhaps first get each line by splitting on \r\n (the Windows line ending). In other words, remove the default \n in the split node and put a space in there instead.

I'd also be tempted to try splitting on " (a double quote), since a lot of your values are wrapped in them.

Not every field in your original text file has quotes, but that's not a huge problem, because Node-RED is great at extracting substrings. Sure, the quoted words would have some extra characters, but we can strip out the fluff as needed.
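Here is a rough sketch of that split-then-clean logic, written in TypeScript because it is close to the JavaScript a Node-RED function node runs. It assumes one record per line, with fields separated by spaces and some fields wrapped in double quotes. That layout is a guess, and the sample data is made up. The function name `parseRawText` is hypothetical.

```typescript
// Hypothetical sketch: assumes one record per line, fields separated by
// spaces/tabs, and some fields wrapped in double quotes.
function parseRawText(text: string): string[][] {
  return text
    .split(/\r?\n/)                     // handle Windows (\r\n) and Unix (\n) line endings
    .map((line) => line.trim())
    .filter((line) => line.length > 0)  // skip blank lines
    .map((line) =>
      line
        .split(/\s+/)                   // split each line on runs of whitespace
        .map((field) => field.replace(/^"+|"+$/g, "")) // strip surrounding quotes (the "fluff")
    );
}

// Made-up sample input, not the real file.
const sample = '"Methane" 0.8123\r\n"Ethane" 0.0712\r\n';
console.log(parseRawText(sample)); // [ [ 'Methane', '0.8123' ], [ 'Ethane', '0.0712' ] ]
```

To use it inside a function node, you would drop the type annotations and finish with something like `msg.payload = parseRawText(msg.payload); return msg;`. One caveat: a quoted name that contains a space (for example "Carbon Dioxide") would be split in two. That is where splitting on the double quote instead might work better.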

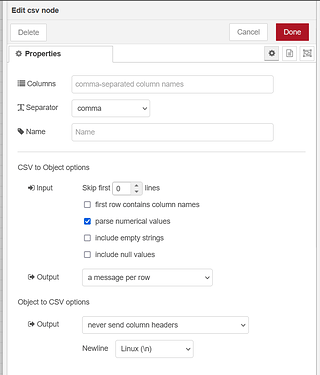

Now, if you can do a CSV, then I would use the CSV to JSON node…

With this node, you get to name the JSON keys in the top line, so you can address the data exactly as key:value pairs later in the flow.
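As an illustration of what that node produces, here is a small TypeScript sketch that turns CSV rows into objects keyed by the column names you supply. It is a simplified stand-in, not the node's actual implementation. It does not handle quoted commas, and it leaves every value as a string (the real node may convert numbers, depending on its settings). The names `csvToObjects`, `gas`, and `rawMole` are hypothetical.

```typescript
// Simplified stand-in for the CSV node: each row becomes an object whose
// keys are the supplied column names. No handling of quoted commas.
type Row = Record<string, string>;

function csvToObjects(csv: string, columns: string[]): Row[] {
  return csv
    .split(/\r?\n/)
    .filter((line) => line.trim().length > 0) // ignore blank/trailing lines
    .map((line) => {
      const values = line.split(",").map((v) => v.trim());
      const row: Row = {};
      columns.forEach((key, i) => {
        row[key] = values[i] ?? ""; // missing columns become empty strings
      });
      return row;
    });
}

// Hypothetical column names and made-up data.
const rows = csvToObjects("Methane,0.8123\nEthane,0.0712", ["gas", "rawMole"]);
console.log(rows[1].rawMole); // 0.0712
```

The payoff is that later nodes can refer to `msg.payload[1].rawMole` by name instead of counting fields, which is why CSV is so much easier to work with than the raw text.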

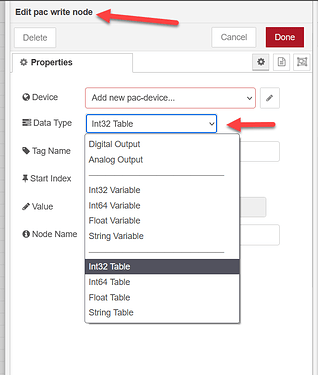

Once you have extracted your gas name and raw mole value, you can send them to the controller using the PAC nodes. You want the PAC write node.

I would probably just deal with them as individual variables, but as you can see, you can also build a table and send the values that way.
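To make the "vars versus table" choice concrete, here is a TypeScript sketch of the data shaping only. It does not show the message format the PAC write node expects, which you would need to check against the node's own documentation. The field names and the `gas_` variable-name prefix are hypothetical.

```typescript
// Hypothetical shapes: "gas", "rawMole" and the "gas_" prefix are
// illustrations, not PAC node requirements.
interface GasReading {
  gas: string;
  rawMole: number;
}

// Convert parsed rows to typed readings, dropping rows whose value isn't numeric.
function toReadings(rows: Array<Record<string, string>>): GasReading[] {
  return rows
    .map((r) => ({ gas: r.gas, rawMole: Number(r.rawMole) }))
    .filter((r) => r.gas !== undefined && isFinite(r.rawMole));
}

// Option 1: one value per controller variable, keyed by a made-up name.
function asVariables(readings: GasReading[]): Record<string, number> {
  const vars: Record<string, number> = {};
  for (const r of readings) {
    vars["gas_" + r.gas] = r.rawMole;
  }
  return vars;
}

// Option 2: one array in file order, e.g. for writing to a float table.
function asTable(readings: GasReading[]): number[] {
  return readings.map((r) => r.rawMole);
}
```

Individual variables are easier to read in the strategy. A table keeps the values in file order and needs only one write, but the strategy then has to know which index holds which gas.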

Note: you need to be running 9.x firmware to use these nodes.

Hope that gives you some ideas.

I'm sure there are other ways to do the same thing, but reading a text file is very hard with a PAC. While you could parse the raw string on the controller, it would need to be sliced up a lot due to the PAC's limit of 1024 characters.
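To show what that slicing looks like, here is a TypeScript sketch that cuts a raw string into consecutive chunks of at most 1024 characters, using the limit stated above. The function name `sliceForPac` is hypothetical. Note that it cuts blindly at the limit, so a record can end up split across two chunks, and every chunk would still need parsing afterwards. That is a good reason to do the parsing in Node-RED instead.

```typescript
// Assumed limit, taken from the post: 1024 characters per PAC string.
const PAC_STRING_LIMIT = 1024;

// Split a raw string into consecutive chunks no longer than `limit`.
// Cuts at fixed positions, so a line/record may straddle two chunks.
function sliceForPac(raw: string, limit: number = PAC_STRING_LIMIT): string[] {
  if (limit <= 0) throw new Error("limit must be positive");
  const chunks: string[] = [];
  for (let i = 0; i < raw.length; i += limit) {
    chunks.push(raw.slice(i, i + limit));
  }
  return chunks;
}
```

For a 2,500-character string, this returns three chunks of 1024, 1024, and 452 characters.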