I am using Node-RED to read a string of data from a serial device and write the data into a .csv file that can be read by a specific method in a script in Ignition Edge. I won’t go into detail about the Ignition side of things, but basically, the .csv file needs to be formatted exactly as follows (without the comments I’ve added for this post):

```
"#NAMES"
"Cycle number","Date","Time","Energy" //name of each column
"#TYPES"
"I","str","str","I" //alias for the Java data type of each column
"#ROWS","4" //number of rows (must match actual number of rows)
"7621","01/31/25","10:47:54 AM","775"
"7622","01/31/25","10:49:07 AM","740"
"7623","01/31/25","10:49:58 AM","760"
"7624","01/31/25","10:50:57 AM","785"
```

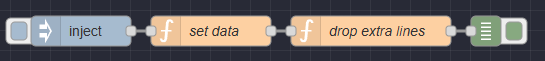

I want this .csv file to always contain data from the 3000 most recent cycles of the serial device (the device outputs one string per cycle). I can already use a function node to convert each string into the required format for a data row:

```
7631 01/31/25 11:03:46 AM 765 //raw string from device
"7631","01/31/25","11:03:46 AM","765" //formatted for .csv file
```
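A minimal sketch of that formatting step, assuming every raw line has the layout `<cycle> <MM/DD/YY> <hh:mm:ss> <AM|PM> <energy>` separated by whitespace, as in the example. `RAW_LINE` and `formatRow` are hypothetical names; the pattern would need adjusting if the device ever pads or reorders fields.

```javascript
// Hypothetical pattern for one raw device line, e.g. "7631 01/31/25 11:03:46 AM 765".
// Assumes the field order and date/time layout in the example never change.
const RAW_LINE = /^\s*(\d+)\s+(\d{2}\/\d{2}\/\d{2})\s+(\d{1,2}:\d{2}:\d{2})\s+([AP]M)\s+(\d+)\s*$/;

// Hypothetical helper: turns one raw line into a quoted CSV data row,
// or returns null if the line does not match the expected layout.
function formatRow(raw) {
  const match = RAW_LINE.exec(String(raw));
  if (match === null) {
    return null;
  }
  const [, cycle, date, time, ampm, energy] = match;
  // Rejoin time and AM/PM into one field, then wrap every field in double quotes.
  return [cycle, date, `${time} ${ampm}`, energy]
    .map((field) => `"${field}"`)
    .join(",");
}
```

Inside the function node, `msg.payload = formatRow(msg.payload); return msg.payload === null ? null : msg;` would silently drop unparseable lines, since a function node that returns `null` sends no message. Trailing `\r\n` from the serial node is tolerated by the final `\s*`.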

But to keep only the 3000 most recent entries, on each cycle I need to append the new row after the last line of the .csv file and “delete” line 6 (the oldest row of data).
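One way to get that rolling window, sketched under the assumption that the formatted rows are held in memory (for example in the function node's context via `context.get`/`context.set`) and the whole file is rewritten each cycle instead of being edited line by line. `MAX_ROWS` and `pushRow` are hypothetical names.

```javascript
const MAX_ROWS = 3000; // size of the rolling window

// Hypothetical helper: appends the newest row and discards the oldest rows
// so that at most maxRows remain. Mutates and returns the same array.
function pushRow(rows, row, maxRows = MAX_ROWS) {
  rows.push(row);
  while (rows.length > maxRows) {
    rows.shift(); // index 0 is the oldest row (line 6 of the file)
  }
  return rows;
}
```

In a function node this could look like `const rows = context.get("rows") || []; pushRow(rows, row); context.set("rows", rows);`, with the resulting text sent to a file node whose action is "overwrite file" rather than "append to file". The `shift()` copies up to 3000 elements once per cycle, which is negligible at this rate. Note that the default in-memory context is lost when Node-RED restarts unless a persistent context store is configured.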

A “nice to have” would be to update the row count on line 5 as rows are added, and to not delete the oldest row (line 6) until there are 3000 rows of data. Otherwise, I could just fill the file with dummy data to start; the machine will reach 3000 cycles pretty quickly.
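If the file is rebuilt from the buffered rows every cycle, the `#ROWS` value can simply be computed from the buffer length, which covers the ramp-up period without dummy data. A minimal sketch: `buildCsv` is a hypothetical name, the header lines are copied from the required format (without the `//` comments and with no space between the type aliases), and whether Ignition expects `\n` or `\r\n` line endings or a trailing newline is an assumption to check.

```javascript
// Hypothetical helper: builds the complete file text from an array of
// already-quoted data rows (oldest first). #ROWS always equals rows.length.
function buildCsv(rows) {
  const header = [
    '"#NAMES"',
    '"Cycle number","Date","Time","Energy"',
    '"#TYPES"',
    '"I","str","str","I"',
    `"#ROWS","${rows.length}"`,
  ];
  // Assumes "\n" line endings and a trailing newline are acceptable to Ignition.
  return header.concat(rows).join("\n") + "\n";
}
```

The function node would then set `msg.payload = buildCsv(rows)`, where `rows` is the buffered list of formatted rows, before writing the file. If the file node's option to add a newline to each payload is enabled, the trailing `"\n"` here would be redundant.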

The method I’m using in Ignition is pretty inflexible, so I can’t read only certain lines of the .csv file there. I also can’t let this dataset grow indefinitely in Ignition.

Is this possible to do within a function node?
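One gap with keeping the rows in memory is a Node-RED restart: the file on disk survives, but the context may not. A minimal sketch of re-seeding the buffer, assuming the existing file is read back as a single UTF-8 string (for example by a file-in node) and always starts with exactly the five header lines of the required format; `HEADER_LINES` and `rowsFromCsv` are hypothetical names.

```javascript
const HEADER_LINES = 5; // "#NAMES", names, "#TYPES", types, "#ROWS" line

// Hypothetical helper: recovers the data rows from an existing file's text,
// skipping the header and blank lines and keeping at most the newest maxRows.
// maxRows must be a positive integer.
function rowsFromCsv(text, maxRows = 3000) {
  const rows = String(text)
    .split(/\r?\n/)
    .slice(HEADER_LINES)
    .filter((line) => line.trim() !== "");
  return rows.slice(-maxRows);
}
```

The result could be stored with `context.set("rows", rowsFromCsv(msg.payload))`, for example in a small flow triggered once at startup by an inject node.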