I am currently running a Node-RED program on a groov EPIC that gathers about 500,000 values from field devices once per day and writes them to a SQL Server. The program has a memory leak or some other problem that causes it to eat up memory even after the flow has finished running. I start at about 80 MB of memory used and, when the flow is done, end up somewhere around 200 MB used. I am using node-red-contrib-mssql-plus version 0.4.4 to log the data and HTTP nodes to get it from the devices. Is there a known memory leak in the mssql-plus node?

What version of groov EPIC firmware are you using? I did find this KB article that describes something similar in version 1.5 - Opto22 - KB88892

One other question. Are you doing this all in a single node?

I was using System Version 2.0.2-b.139 for the EPIC, Node-RED version is 1.0.3, and Node.js version is v10.16.3

No, it is multiple nodes. I have a few HTTP nodes, 2 MSSQL nodes, and a few general nodes like function and template nodes.

Hi Timothy.

I don’t think you are seeing a memory leak (but you could always open an issue on the node developer’s GitHub to double check).

I think what you are seeing is normal behavior for Node.js, the runtime underneath Node-RED.

Pretty much all the nodes use memory to buffer their data.

This topic has seen much discussion on the Node-RED forums;

Here is another general question;

And this user sees the same issue with the FTP node;

I took a quick look via shell and I believe that Opto leaves the default --max-old-space-size=256 setting in place. So Node.js will do its garbage collection roughly around that value. (That value is not set in stone by Node.js.)

Bottom line: the 500,000 records are what is using the memory. Since there is no buffering or rate limit, the data floods in rather quickly, and the garbage collector is not cleaning it up because usage is probably sitting just at the threshold where it would cut in. So the memory is left hanging even after that part of the flow has done its job.
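If you want to confirm what heap limit the runtime is actually using, a small standalone Node.js script along these lines should show it. This is only a sketch: it runs outside Node-RED, the function name `heapReport` is made up for illustration, and the limit it prints only roughly tracks the old-space setting.

```javascript
// Standalone Node.js sketch (not a Function node): report the V8 heap limit
// and the current memory usage of this process.
const v8 = require('v8');

function toMB(bytes) {
  return Math.round(bytes / (1024 * 1024));
}

// heapReport is a hypothetical helper name, not part of Node-RED or Node.js.
function heapReport() {
  const stats = v8.getHeapStatistics();
  const usage = process.memoryUsage();
  return {
    heapLimitMB: toMB(stats.heap_size_limit), // roughly tracks --max-old-space-size
    heapUsedMB: toMB(usage.heapUsed),         // live JavaScript objects
    rssMB: toMB(usage.rss),                   // total resident memory of the process
  };
}

console.log(heapReport());
```

Saved as, say, `heap-report.js` (a hypothetical filename), running it with `node --max-old-space-size=256 heap-report.js` and again without the flag should show the limit changing.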

For what it’s worth, I run Node-RED on a Windows PC (8 cores at 3.7 GHz with 32 GB of RAM) for a personal project I tinker with.

About 3 months ago, I used Node-RED to format and insert 442,209 records into a MySQL DB running on my Linux box on my local network. When I set the flow in motion, it ran for about 5 minutes, flooded my network and then crashed Node-RED before all the records were inserted. My workaround was to break the insert statement up into 3 chunks. Everything ran smoothly doing it that way.

My point is, what you are seeing with the MSSQL node sounds a lot like what I saw with the MySQL node.

If the memory use is a concern, you might like to implement one or two tweaks to your flow.

- Rate limit the collection / insertion of the records. (Be careful how you do this; the Delay node, for example, simply buffers the stream in memory.)

- Break up the collection / insertion of the records into smaller bite sized chunks.

- If you have a shell license (and you are comfortable with the Linux command line and scripting/programming) you might consider running your collection / DB insert via something like a Python script. Note, I don’t know what Python libraries are needed to connect to an MSSQL DB; I just use Python as an example.

I am interested in what you find, and if you decide to change your flow at all, let us know how you get on.
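To make the first two suggestions concrete, here is a minimal sketch of handling records in small batches, waiting for each batch to finish before taking the next one. The names `batches`, `processInBatches` and `handleBatch` are made up for illustration; in a real flow `handleBatch` would be whatever does the insert.

```javascript
// Pause helper: resolves after `ms` milliseconds.
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Lazily yield slices so only one batch is copied out at a time.
function* batches(records, batchSize) {
  if (!(batchSize > 0)) {
    throw new RangeError('batchSize must be greater than 0');
  }
  for (let start = 0; start < records.length; start += batchSize) {
    yield records.slice(start, start + batchSize);
  }
}

// Handle one batch at a time: wait for the handler to finish, then pause,
// so work never piles up faster than it is completed.
async function processInBatches(records, batchSize, pauseMs, handleBatch) {
  let processed = 0;
  for (const batch of batches(records, batchSize)) {
    await handleBatch(batch); // e.g. a database insert (assumed to return a promise)
    processed += batch.length;
    await sleep(pauseMs);
  }
  return processed;
}

// Usage with fake data and a stand-in handler.
const fakeRecords = Array.from({ length: 10 }, (_, i) => i);
processInBatches(fakeRecords, 4, 10, async (batch) => {
  console.log('inserting', batch.length, 'records');
}).then((total) => console.log('done:', total));
```

The difference from a plain delay is that the next batch is not even sliced off until the previous one has completed, so nothing queues up in memory behind a timer.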

Thanks Beno,

My flow will run through completely about 3 times before Node-RED crashes from being out of memory. I am using a rate limiter so that only 1 of the 70 devices is being queried and logged at a time, so only about 4000 values are going through the flow at a time, and I was breaking the SQL query into chunks of about 1000 records at a time, since that is the limit for an MSSQL query.

I am trying something mentioned in your attached links about clearing messages out after they are finished to see if that makes a difference. Beyond that, I may download one of the memory nodes to see if I can track down where the memory is getting used. I am not great with the Linux command line or Python, so I would like to avoid them.
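For anyone following along, the chunking described above might look roughly like this. It is a sketch only: the table `readings` and the columns `device_id` and `value` are made-up names, and it accepts only numeric values so nothing unescaped ends up in the SQL text.

```javascript
// Row cap per INSERT ... VALUES statement, matching the 1000-record chunks above.
const MAX_ROWS_PER_INSERT = 1000;

// Turn an array of { deviceId, value } rows into one INSERT statement per chunk.
// The table and column names are hypothetical placeholders.
function buildInsertStatements(rows, maxRows = MAX_ROWS_PER_INSERT) {
  const statements = [];
  for (let start = 0; start < rows.length; start += maxRows) {
    const tuples = rows.slice(start, start + maxRows).map(({ deviceId, value }) => {
      // Only numbers are allowed, so no quoting or escaping is needed.
      if (!Number.isInteger(deviceId) || !Number.isFinite(value)) {
        throw new TypeError('deviceId must be an integer and value a finite number');
      }
      return `(${deviceId}, ${value})`;
    });
    statements.push(
      `INSERT INTO readings (device_id, value) VALUES ${tuples.join(', ')};`
    );
  }
  return statements;
}

// About 4000 values from one device become 4 statements of 1000 rows each.
const rows = Array.from({ length: 4000 }, (_, i) => ({ deviceId: 1, value: i * 0.5 }));
const statements = buildInsertStatements(rows);
console.log(statements.length); // 4
```

Each statement is an independent string, so it can be sent and then dropped before the next one is handled, rather than holding all of them for the whole run.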
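As a rough illustration of both ideas (clearing data once it is finished with, and tracking where memory goes), here is a standalone Node.js sketch. It is not one of the Node-RED memory nodes, `createMemoryTracker` is a made-up helper, and `process` may not be exposed inside a Function node, so treat it as something to run with plain `node`.

```javascript
// Hypothetical helper: record heap usage at labelled checkpoints and report
// the change between consecutive checkpoints.
function createMemoryTracker() {
  const marks = [];
  const MB = 1024 * 1024;
  return {
    mark(label) {
      marks.push({ label, heapUsed: process.memoryUsage().heapUsed });
    },
    report() {
      return marks.map((m, i) => ({
        label: m.label,
        heapUsedMB: Number((m.heapUsed / MB).toFixed(1)),
        deltaMB: i === 0
          ? 0
          : Number(((m.heapUsed - marks[i - 1].heapUsed) / MB).toFixed(1)),
      }));
    },
  };
}

const tracker = createMemoryTracker();
tracker.mark('start');

// Stand-in for a large message payload moving through a flow.
let payload = Array.from({ length: 200000 }, (_, i) => ({ id: i, value: i * 0.1 }));
tracker.mark('after building payload');

// Drop the only reference so the garbage collector is free to reclaim it.
payload = null;
tracker.mark('after clearing payload');

console.table(tracker.report());
```

Do not expect the last figure to drop straight away. Clearing the reference only makes the memory eligible for collection, and Node.js decides when to actually collect it.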

I am not sure how you are doing the rate limiting, but as I said, that can sometimes just buffer data in memory just the same.

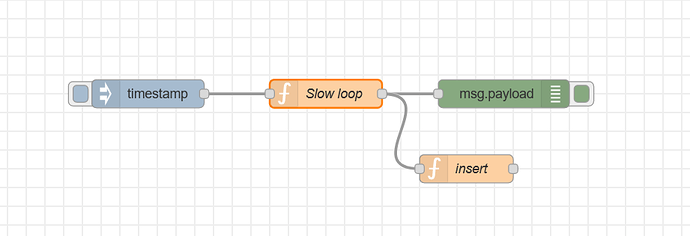

Here is a slow loop that @torchard put together for another reason that I leveraged for the MySQL insert.

This is what the flow looks like.

You will need to tweak it to suit your flow, but it will ‘slowly’ update whatever you point it at.

Here is the flow to import.

[{"id":"2dc27b60.e8473c","type":"function","z":"b42e0f8.222b5f","name":"Slow loop","func":"function sleep(ms) {\n return new Promise(resolve => setTimeout(resolve, ms));\n}\n// Set the number of times you want to send the payload,\n// e.g. loop 1000 times for 1000 records.\n\nasync function demo() {\n for (let i = 1; i < 16; i++) {\n msg.payload = i;\n node.send(msg);\n await sleep(500); // time between sends (in milliseconds)\n }\n}\ndemo();\n// Messages are sent asynchronously via node.send(), so return nothing here.\nreturn null;","outputs":1,"noerr":0,"initialize":"","finalize":"","x":1260,"y":500,"wires":[["94aa5870.4b4c7","5ffe5ea4.b90598"]]},{"id":"71a07e7f.2d6048","type":"inject","z":"b42e0f8.222b5f","name":"","repeat":"","crontab":"","once":false,"onceDelay":0.1,"topic":"","payload":"","payloadType":"date","x":1070,"y":500,"wires":[["2dc27b60.e8473c"]]},{"id":"94aa5870.4b4c7","type":"debug","z":"b42e0f8.222b5f","name":"","active":true,"tosidebar":true,"console":false,"tostatus":false,"complete":"false","x":1450,"y":500,"wires":[]},{"id":"5ffe5ea4.b90598","type":"function","z":"b42e0f8.222b5f","name":"insert","func":"msg.topic = \"INSERT INTO aircraft (airframe) VALUES('\"+msg.payload+\"')\";\n\nreturn msg;","outputs":1,"noerr":0,"initialize":"","finalize":"","x":1410,"y":580,"wires":[[]]}]

Beno,

I went onto the node developer’s GitHub page and posted the issue there. We tried the rate limiting, but it didn’t help. He did post this, though, which looks interesting. He is saying that other people have had issues using the Node.js standard library promises with MySQL nodes.

Do you think this could be the issue? The general link for my issue is here.

Just a quick reply to let you know that both Terry and I have seen your reply and are digging into it.