We use a groov EPIC to manage two SNAP PAC R1 controllers (one for digital I/O and one for analog I/O).

In the strategy, we have a chart that copies all I/O from our SNAP PAC R1s using the command “move I/O unit to numeric table ex”, and we call it every 100 ms.

Everything works well, but sometimes an I/O error (error -539) occurs during the command. The error appears on either the digital or the analog unit.

We upgraded the SNAP PAC R1 (10.0f) and groov EPIC (1.4.3-b130) to the latest releases, with no change.

Are the R1 controllers also running their own strategy?

What else is on the network other than the three devices?

How many modules on each rack?

No strategy runs on the R1 controllers; I use them as I/O heads (acquisition units without any strategy). On the network, we have 4 PCs, 1 groov EPIC, 2 R1 controllers, and 2 Siemens S7-1200s.

On the first R1 controller (digital), we have eight high-density 32-channel DI modules (SNAP-IDC-32) and five high-density 32-channel DO modules (SNAP-ODC-32-SRC). On the second R1 controller (analog), we have five 32-channel AI modules (SNAP-AIV-32) and five AOV-28 modules with 2 AO each.

The problem appeared when we replaced a SNAP PAC S1 controller with a groov EPIC PR1.

Thank you for your help.

The PR1 runs significantly faster than the S1, so I suspect that is the core issue. You are now over-polling the R1 controllers, whereas with the S1 things were a little more sedate.

Let’s look at some options.

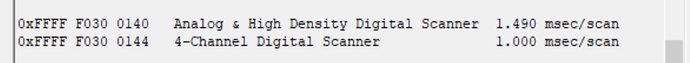

- The digital R1. Both of those module types have a data freshness of around 30 ms, so polling them every 100 ms is OK. I would turn off the control engine on that R1, and since both module types are high-density digital, they use the analog scanner, so I would also turn off the digital scanner. In effect, you will be using the R1 controller as a high-speed analog brain, which is exactly what you need.

The challenge for this rack is the sheer number of modules (and thus the number of points you are reading).
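To put the “sheer number of points” in figures, here is a quick tally built from the module list posted earlier. It is a rough sketch that assumes each “move I/O unit to numeric table ex” call transfers every point on the unit and that the AOV-28 modules have 2 channels each, as stated; the dictionary layout and function names are made up for illustration.

```python
# Module counts from the original post; channels per module follow the part
# numbers (the 32-channel modules) and the stated "2 AO" for the AOV-28s.
RACKS = {
    "digital": [("SNAP-IDC-32", 8, 32), ("SNAP-ODC-32-SRC", 5, 32)],
    "analog": [("SNAP-AIV-32", 5, 32), ("AOV-28", 5, 2)],
}


def points_per_rack(racks):
    """Total I/O points per rack: sum of (module count x channels)."""
    return {
        name: sum(count * channels for _, count, channels in modules)
        for name, modules in racks.items()
    }


def points_per_second(points, poll_ms):
    """Point values transferred per second if every point is read each poll."""
    return points * 1000 / poll_ms


if __name__ == "__main__":
    for rack, pts in points_per_rack(RACKS).items():
        print(f"{rack}: {pts} points, "
              f"{points_per_second(pts, 100):.0f} point values/s at 100 ms")
```

Run as a script, it reports 416 points for the digital rack and 170 for the analog rack, i.e. roughly 4,160 and 1,700 point values per second at a 100 ms poll.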

Take a look at the PAC Manager Inspect tool and see what the R1’s analog scanner is reporting. I would check this both before and after you turn off the control engine and digital scanner, to see how much difference it makes.

- The analog R1. The SNAP-AIV-32 has a data freshness of 1100 ms, so polling it every 100 ms is massive overkill. However, with analog outputs on the rack, you may need to write to them that fast. You only mentioned reading, not writing.

That said, the same advice applies: turn off the control engine and the digital scanner, and turn the controller into a high-speed analog brain.

- In the PR1 control strategy, be sure to have a delay between polling each brain. You don’t want to be banging away at both of them at the exact same time. I’m not sure whether you have separate charts for each rack.

The S1 used a ‘round robin’ scanner since it was a single-core CPU; the PR1 is quad-core and multi-threaded, so you can effectively have a chart per core. Depending on how you have your delays and timing set up, you might be hitting things quite a bit harder than you think.

- Why the ‘recopy’ command at all? The two R1 controllers can simply be I/O units on the EPIC. There is no need to move I/O at all: each point can be addressed as needed from the strategy on the EPIC. Or am I missing something?
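The suggestion to put a delay between polling each brain amounts to spreading the requests out within the poll period instead of firing both at the same instant. The sketch below only computes such a schedule (it does not talk to any hardware); the unit names and the even spacing are illustrative assumptions. In a PAC Control chart, the same effect would come from a delay between the two move commands or from offsetting the charts’ start times.

```python
from typing import List, Tuple


def staggered_schedule(units: List[str], period_ms: int,
                       cycles: int) -> List[Tuple[int, str]]:
    """Return (time_ms, unit) poll events with units spread evenly per period.

    Each unit is still polled once per `period_ms`, but unit i is offset by
    i * (period_ms // len(units)), so no two units are requested together.
    """
    offset_ms = period_ms // len(units)
    events = []
    for cycle in range(cycles):
        for i, unit in enumerate(units):
            events.append((cycle * period_ms + i * offset_ms, unit))
    return sorted(events)


def min_gap_ms(events: List[Tuple[int, str]]) -> int:
    """Smallest spacing between consecutive poll events."""
    times = [t for t, _ in sorted(events)]
    return min(later - earlier for earlier, later in zip(times, times[1:]))


if __name__ == "__main__":
    # Hypothetical unit names for the two R1 racks.
    schedule = staggered_schedule(["digital_r1", "analog_r1"],
                                  period_ms=100, cycles=3)
    for t, unit in schedule:
        print(f"{t:4d} ms  poll {unit}")
    print("minimum gap:", min_gap_ms(schedule), "ms")
```

With two units and a 100 ms period, each brain is still polled every 100 ms, but the two are hit 50 ms apart rather than together.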

(See page 152 of the PAC Manager User’s Guide (Doc 1704) for how to change the scanner flags if you need some help in that regard.)
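The data freshness figures quoted for the two racks (about 30 ms for the high-density digital modules, 1100 ms for the SNAP-AIV-32) can be turned into a simple ratio against the 100 ms poll. This is back-of-the-envelope arithmetic only, assuming “freshness” is the interval at which the module produces a new value; the function names are hypothetical.

```python
def polls_per_update(freshness_ms: float, poll_ms: float) -> float:
    """Average number of polls per new value produced by the module."""
    return freshness_ms / poll_ms


def redundant_fraction(freshness_ms: float, poll_ms: float) -> float:
    """Fraction of polls that can only return a value already read.

    When the module updates at least as often as it is polled
    (ratio <= 1), no poll is redundant.
    """
    ratio = polls_per_update(freshness_ms, poll_ms)
    return 0.0 if ratio <= 1 else 1.0 - 1.0 / ratio


if __name__ == "__main__":
    for module, freshness_ms in (("high-density digital", 30),
                                 ("SNAP-AIV-32", 1100)):
        print(f"{module}: {polls_per_update(freshness_ms, 100):.1f} polls per "
              f"update, {redundant_fraction(freshness_ms, 100):.0%} redundant")
```

For the SNAP-AIV-32 this works out to about 10 of every 11 reads returning a value the module has not refreshed since the previous read, while the digital modules keep up with the 100 ms poll.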

I’d also like to get input from @philip and others on this one… It’s an interesting scenario.

Simon, I think you should focus on Beno’s #4. I have similar setups that we upgraded from significantly slower controllers to a PR1, with polling of several I/O units and Modbus data, and we had no issues whatsoever. This looks like a chart running way too fast and choking up the table. Move the I/O values to variables and use delays, and you should have no issues.
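A rough illustration of the “move I/O values to variables” idea: do one bulk read per cycle, then let the rest of the logic read local copies instead of each chart going back to the I/O unit. `read_unit` is a stand-in for whatever performs the bulk transfer, and the class and point names are hypothetical; this is a pattern sketch in Python, not PAC Control code.

```python
from typing import Callable, Dict, List


class IoSnapshot:
    """Hypothetical cache: one bulk read per cycle, many cheap local reads.

    `read_unit` stands in for the bulk transfer from the I/O unit; it is an
    assumption for illustration, not a real API.
    """

    def __init__(self, read_unit: Callable[[], List[float]],
                 names: List[str]) -> None:
        self._read_unit = read_unit
        self._names = names
        self.values: Dict[str, float] = {}
        self.unit_reads = 0  # how many times the I/O unit was actually queried

    def refresh(self) -> None:
        """Do the single I/O read for this cycle and map it to named values."""
        table = self._read_unit()
        self.unit_reads += 1
        self.values = dict(zip(self._names, table))

    def get(self, name: str) -> float:
        """Local read from the snapshot: no traffic to the I/O unit."""
        return self.values[name]


if __name__ == "__main__":
    fake_table = [1.0, 0.0, 4.2]  # placeholder values
    snap = IoSnapshot(lambda: fake_table,
                      ["pump_run", "valve_open", "tank_level"])
    for _ in range(10):            # ten control cycles
        snap.refresh()
        for _consumer in range(5):  # five pieces of logic use the values
            snap.get("tank_level")
    print("unit reads:", snap.unit_reads)
```

Ten cycles with five consumers each still produce only ten reads of the I/O unit, instead of fifty if every consumer queried the unit directly.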

If the polling is every 100 ms, I don’t see why the speed of the PR1 would change anything. The R1 should see the same request at the same interval as it did when running on an S1.
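One way to reconcile the two views in this thread: if a loop is paced by an explicit delay, the request rate is set by that interval and controller speed hardly matters; if a loop has little or no delay, or several charts run at once on a multi-core controller, the rate scales with how quickly each loop completes and with the number of charts. The model below is a simplification with made-up timing parameters and ignores network queuing.

```python
def requests_per_second(units: int, charts: int,
                        delay_ms: float, loop_body_ms: float) -> float:
    """Approximate requests/s arriving at the I/O units.

    Each chart polls `units` I/O units per loop. One loop lasts the time the
    body takes (`loop_body_ms`, including the blocking I/O round trips) plus
    any explicit delay (`delay_ms`). Charts are assumed to run independently.
    """
    loop_ms = loop_body_ms + delay_ms
    return charts * units * 1000.0 / loop_ms


if __name__ == "__main__":
    # Paced by a 100 ms delay: a slower or faster loop body barely matters.
    print(f"{requests_per_second(2, 1, 100, 10):.1f}")
    print(f"{requests_per_second(2, 1, 100, 2):.1f}")
    # No delay: the rate depends entirely on how fast the body completes.
    print(f"{requests_per_second(2, 1, 0, 10):.1f}")
    print(f"{requests_per_second(2, 1, 0, 2):.1f}")
    # Several charts polling concurrently multiply the load.
    print(f"{requests_per_second(2, 4, 100, 2):.1f}")
```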

How often are the -539 errors happening?

I’m thinking this may be network-related, with a packet getting lost occasionally. Maybe the PR1 is a bit more sensitive at detecting this? If this is affecting your process (the move I/O unit command blocks until it completes or times out, with a default timeout of 5 seconds), you could decrease the timeout for your I/O units so the timeout and retry happen faster. If the typical response time on your network is short, setting the timeout to a low value like 50 ms might help (though it doesn’t fix the error).
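The effect of shortening the timeout can be estimated with simple arithmetic. The sketch assumes a single lost packet followed by one successful retry, so the command stalls for one full timeout plus a normal response; the 5 ms response time in the example is a placeholder, not a measurement. The timeout still has to sit comfortably above the normal response time, or ordinary replies will start being counted as errors.

```python
import math


def stall_after_lost_packet_ms(timeout_ms: float, response_ms: float) -> float:
    """Time a blocking I/O command waits when one request is lost.

    Assumed model: the first request times out after `timeout_ms`, then a
    single retry succeeds after a normal `response_ms` round trip.
    """
    return timeout_ms + response_ms


def polls_delayed(stall_ms: float, poll_period_ms: float) -> int:
    """Number of poll periods the stall spills into (rounded up)."""
    return math.ceil(stall_ms / poll_period_ms)


if __name__ == "__main__":
    response_ms = 5  # placeholder for the typical network response time
    for timeout_ms in (5000, 50):  # default 5 s versus the suggested 50 ms
        stall = stall_after_lost_packet_ms(timeout_ms, response_ms)
        print(f"timeout {timeout_ms} ms -> stall {stall} ms, "
              f"{polls_delayed(stall, 100)} poll period(s) at 100 ms")
```

Under these assumptions, one lost packet costs about 51 poll periods with the 5 s default, but only one with a 50 ms timeout.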