I can’t make any guarantees about how well the timestamps will line up if you do this, but this may work for you.

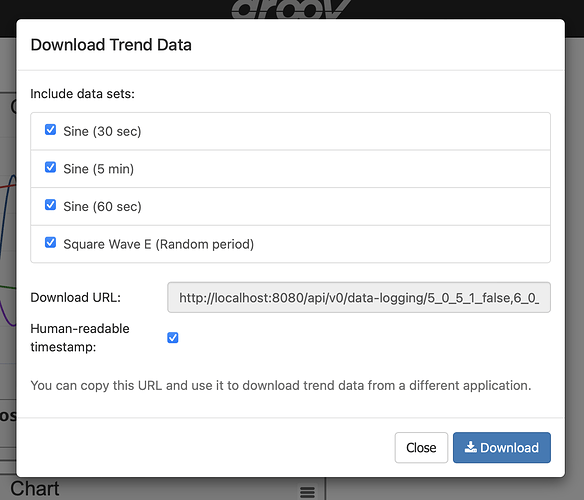

For data set you want to download, copy the download link into a plain text file. For example, looking at this download dialog:

Copy the entire URL that’s in that “Download URL” field. In my case, it looks like this:

http://localhost:8080/api/v0/data-logging/5_0_5_1_false,6_0_5_1_false,7_0_5_1_false,8_0_5_1_false/scanned-data.csv?apiKey=NdNy2GDMVBMyWcC4JsULjpJ63sr9fYWB&humans=true

Do that for each trend that you want to download data for. They’ll all need to be configured for the same period and scan interval or you’re going to get weird data out. Once you have all of your URLs, like this:

http://localhost:8080/api/v0/data-logging/5_0_5_1_false,6_0_5_1_false,7_0_5_1_false,8_0_5_1_false/scanned-data.csv?apiKey=NdNy2GDMVBMyWcC4JsULjpJ63sr9fYWB&humans=true

http://localhost:8080/api/v0/data-logging/1_0_5_1_false,2_0_5_1_false,3_0_5_1_false,4_0_5_1_false/scanned-data.csv?apiKey=NdNy2GDMVBMyWcC4JsULjpJ63sr9fYWB&humans=true

Look at the part between data-logging and scanned-data.csv; those are the identifiers to tell it which data set to download. (For the curious, the identifiers are <tag-id>_<array-index>_<trend-period>_<scan-interval>_<interactive>, but that’s not super important.) We want to combine all of the identifiers you want to download into a single URL, separated by commas. The identifiers in my URLs above are:

1_0_5_1_false

2_0_5_1_false

3_0_5_1_false

4_0_5_1_false

5_0_5_1_false

6_0_5_1_false

7_0_5_1_false

8_0_5_1_false

(The tag ids are only sequential like this because I started with a blank project and used the data simulator, so don’t try to attribute any particular meaning to them.)

Combining them all together into one URL, we get this:

http://localhost:8080/api/v0/data-logging/1_0_5_1_false,2_0_5_1_false,3_0_5_1_false,4_0_5_1_false,5_0_5_1_false,6_0_5_1_false,7_0_5_1_false,8_0_5_1_false/scanned-data.csv?apiKey=NdNy2GDMVBMyWcC4JsULjpJ63sr9fYWB&humans=true
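If you have a lot of trends, doing this by hand gets tedious. Here is a rough Python sketch of the same combining step. The function names `extract_identifiers` and `combine_urls` are just ones I made up for illustration, and it assumes every URL has the same shape as the ones above (an identifier list sitting right after `data-logging` in the path). It keeps identifiers in the order it first sees them and reuses the host and query string from the first URL.

```python
from urllib.parse import urlsplit, urlunsplit


def extract_identifiers(url):
    """Return the identifiers found between data-logging/ and /scanned-data.csv."""
    segments = urlsplit(url).path.split("/")
    # Assumption: the identifier list is the path segment right after "data-logging".
    id_segment = segments[segments.index("data-logging") + 1]
    return id_segment.split(",")


def combine_urls(urls):
    """Merge the identifiers of several download URLs into a single URL."""
    identifiers = []
    for url in urls:
        for ident in extract_identifiers(url):
            if ident not in identifiers:  # skip duplicates, keep first-seen order
                identifiers.append(ident)

    # Reuse scheme, host, and query string from the first URL.
    first = urlsplit(urls[0])
    segments = first.path.split("/")
    segments[segments.index("data-logging") + 1] = ",".join(identifiers)
    return urlunsplit(first._replace(path="/".join(segments)))
```

Calling `combine_urls([...])` with the copied URLs in a list should give you one URL containing every identifier, in the order the URLs were listed.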

Last up, there’s a stupid bug that I’m just now noticing and that appears to have been there since this feature was first introduced: the API key query parameter is named wrong. We need to rename apiKey to api_key or the link won’t work correctly. So the URL should now look like this:

http://localhost:8080/api/v0/data-logging/1_0_5_1_false,2_0_5_1_false,3_0_5_1_false,4_0_5_1_false,5_0_5_1_false,6_0_5_1_false,7_0_5_1_false,8_0_5_1_false/scanned-data.csv?api_key=NdNy2GDMVBMyWcC4JsULjpJ63sr9fYWB&humans=true
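If you are scripting this, the rename can be done on the query string rather than with a blind find-and-replace. This is only a sketch using Python's standard `urllib.parse`; `fix_api_key_param` is a name I picked for the example. Note that `urlencode` will percent-encode any special characters in the values, which should not matter for a plain alphanumeric key like the one above.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def fix_api_key_param(url):
    """Rename the apiKey query parameter to api_key, leaving everything else alone."""
    parts = urlsplit(url)
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    # Only the parameter name changes; values and parameter order are preserved.
    renamed = [("api_key" if name == "apiKey" else name, value) for name, value in pairs]
    return urlunsplit(parts._replace(query=urlencode(renamed)))
```

A URL that already uses `api_key` passes through unchanged, so it should be safe to run on every link.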

Take that URL, paste it into a web browser or Node-RED or whatever tool you want to use to fetch the file, and it should retrieve a CSV file with all of your data sets in it, like this:

timestamp,value1,value2,value3,value4,value5,value6,value7,value8

2023-12-14 14:43:50,-0.45081523060798645,0.5240156650543213,-0.46782493591308594,0.24101747572422028,-0.8926172852516174,-0.9939218163490295,-0.8517086505889893,1.0

2023-12-14 14:43:51,-0.25517576932907104,0.610255777835846,-0.46163713932037354,0.237624853849411,-0.9668946862220764,-0.9913955926895142,-0.7922044396400452,1.0

2023-12-14 14:43:52,-0.04857084900140762,0.689720630645752,-0.45543304085731506,0.23423273861408234,-0.9988197684288025,-0.9884368181228638,-0.7240755558013916,1.0

2023-12-14 14:43:53,0.16015684604644775,0.7616288065910339,-0.44920673966407776,0.23083777725696564,-0.987091600894928,-0.9850444197654724,-0.6480135917663574,0.0

2023-12-14 14:43:54,0.3618849217891693,0.825192391872406,-0.4429585337638855,0.2274399846792221,-0.9322227835655212,-0.981220006942749,-0.5648517608642578,0.0
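If you would rather pull the file from a script than a browser, something along these lines may work. It is a sketch, not a tested integration: `fetch_csv_text` and `parse_scanned_data` are hypothetical helper names, and it assumes the response is UTF-8 text with a `timestamp` column formatted like the sample above and numeric values in every other column. Empty cells, if the server ever sends them, are turned into `None`.

```python
import csv
import io
from datetime import datetime
from urllib.request import urlopen


def fetch_csv_text(url):
    """Download the CSV and return it as text (assumes UTF-8 encoding)."""
    with urlopen(url) as response:
        return response.read().decode("utf-8")


def parse_scanned_data(text):
    """Turn the CSV text into a list of dicts with typed values."""
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        # Assumption: timestamps look like "2023-12-14 14:43:50", as in the sample.
        row = {"timestamp": datetime.strptime(record["timestamp"], "%Y-%m-%d %H:%M:%S")}
        for name, value in record.items():
            if name != "timestamp":
                row[name] = float(value) if value else None
        rows.append(row)
    return rows
```

You would pass the final URL (the one with `api_key`) to `fetch_csv_text` and hand the result to `parse_scanned_data`.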