Hello all… My company has been using Opto products for a very long time, and we’re in the process of updating old LCM-4 controllers to Groov EPIC controllers. One thing I’m struggling with is persistent variables.

On the LCM-4 controllers, using OptoControl, persistent variables didn’t exist. We use recipe files, configured for upload and download in OptoDisplay, to save variable values across downloads. There are benefits and drawbacks to this method. The biggest benefit is that the recipe file can retain variable values in situations where PAC Control loses persistent variable values: clearing controller RAM, a failed/cancelled download, changing table lengths, etc. The drawback, or really the one huge drawback, is that it relies on OptoDisplay Runtime. OptoDisplay Runtime is incredibly slow to process recipe files, regularly corrupts the files, and sometimes crashes, so it doesn’t save or restore data at all.

I like the concept of persistent variables in PAC Control, but there are drawbacks, some of which I already mentioned. If I change a table length, the persistent data is gone. Some of the events that wipe persistent data should be quite rare, but others (changing a table length, a download being cancelled or failing) are not so rare, and I don’t want to lose data in those cases. Perhaps there’s an argument to be made that my programming needs to be better so that those things don’t happen, but that’s another discussion.

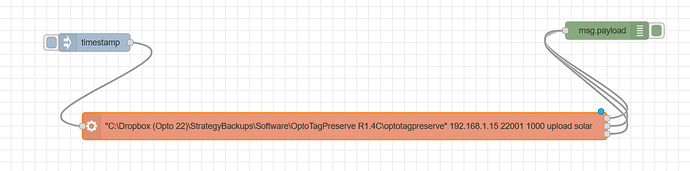

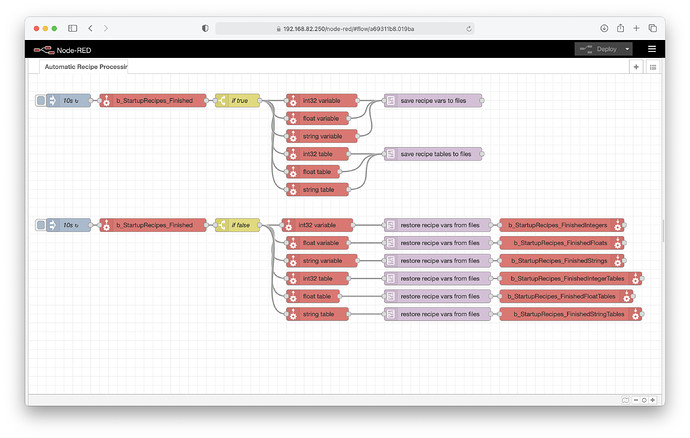

My production people are on my case about developing a strategy to make persistent data as foolproof as possible, which is why I’m here. For those of you who have been using PAC Control longer and have had more time with the newer tools available on Groov EPIC controllers (Node-RED, MQTT, etc.), does anybody have a good strategy they could share with me?

One other reason I’m interested in this topic is that we’re considering other HMI options (Groov View), so I’d really like to avoid depending on PAC Display for recipe data if possible.