Can anyone give me an estimate on the amount of space the new interactive trend takes on groov? Like sizewise. I assume the information is going to be kept on the groov box so I would like to know how much space this takes and how to purge the graph eventually if possible.

Ok, this is a bit complicated, not set in stone, subject to change, etc.

We store both the raw values for your trend and a set of averaged values to let us display things quickly. We try to keep it to around 700 points per series displayed on the trend at a time to keep things snappy.

The raw data set takes up 16 bytes per sampled value: 8 bytes of timestamp (in milliseconds since 1970, e.g. a Java or JavaScript timestamp) and 8 bytes of data (a 64-bit floating point value). For a 31 day trend sampled once a second, that gives you 2,678,400 samples (60 seconds * 60 minutes * 24 hours * 31 days) stored in about 41 MiB (42,854,400 bytes).
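For anyone who wants to plug in their own numbers, here is a minimal sketch of that arithmetic. The function and constant names are made up for illustration; only the 16 bytes per sample figure comes from the post above.

```python
# Illustrative names only; the 16-byte figure is the one quoted above.
BYTES_PER_RAW_SAMPLE = 16  # 8-byte timestamp + 8-byte float64 value


def raw_trend_bytes(period_seconds: int, interval_seconds: int) -> int:
    """Approximate raw storage for one series of a trend."""
    samples = period_seconds // interval_seconds
    return samples * BYTES_PER_RAW_SAMPLE


period = 31 * 24 * 60 * 60  # 31 days in seconds
size = raw_trend_bytes(period, interval_seconds=1)

# Show both decimal megabytes and binary mebibytes, since they differ.
print(f"{size:,} bytes = {size / 10**6:.1f} MB = {size / 2**20:.1f} MiB")
```

Note the two units: the same byte count is about 42.9 MB (10^6 bytes) but about 40.9 MiB (2^20 bytes).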

The averaged data sets take up 32 bytes per sample: 8 bytes of timestamp, an 8-byte averaged value, and the 8-byte minimum and maximum values seen during the averaged timespan. (We don’t currently offer a way to display the minimum and maximum, unfortunately.) They’re built on a set of pre-determined, regular intervals: every 2 seconds, 5s, 10s, 15s, 30s, 1 minute, 5m, etc. The set chosen depends on how long your chart is and what refresh rate you’ve chosen, but in the worst case they’ll double the storage size of your trend.
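To make the averaged sets a bit more concrete, here is a rough sketch of bucketing raw samples into fixed intervals and keeping the average, minimum and maximum for each. This is not groov's actual code: the function names, the little-endian byte layout, and the field order are assumptions chosen only so that each record comes out at 32 bytes.

```python
import struct

# Assumed layout (not confirmed by the post): int64 timestamp in ms,
# then float64 average, minimum and maximum. 8 + 8 + 8 + 8 = 32 bytes.
AVERAGED_RECORD = struct.Struct("<qddd")


def average_buckets(samples, bucket_ms):
    """Group (timestamp_ms, value) pairs into fixed-width buckets."""
    buckets = {}
    for timestamp_ms, value in samples:
        start = timestamp_ms - timestamp_ms % bucket_ms
        buckets.setdefault(start, []).append(value)
    return [
        (start, sum(vals) / len(vals), min(vals), max(vals))
        for start, vals in sorted(buckets.items())
    ]


def pack_buckets(rows):
    """Serialize the averaged rows, 32 bytes each."""
    return b"".join(AVERAGED_RECORD.pack(*row) for row in rows)


raw = [(0, 1.0), (1000, 3.0), (2000, 5.0), (3000, 7.0)]
rows = average_buckets(raw, bucket_ms=2000)
print(rows)
print(len(pack_buckets(rows)), "bytes")
```

Each averaged record is twice the size of a raw sample, but there are far fewer of them per interval, which fits the "at most double" worst case described above.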

We have tools in the pipeline planned to help you see how much space is being used and manage it (and probably configured data logging that isn’t tied to a chart), but they aren’t ready yet.

Thank you. This is very important information to us.

Also thanks for your prompt reply.

Some ideas to optimize storage space:

Since the samples are at regular intervals, the time stamp only needs to be listed in the header. Then you seek to the offset for the sample that needs to be returned and calculate the time stamp.

If you store the delta between samples instead of the sample itself, the dataset becomes very compressible for common datasets, where values don’t change very much. The actual value could be stored every so many samples to lower the burden on the CPU in calculating the values.

Compress the data using something like lz4 before storing. Often, this is a win-win: it reduces the storage space, and the faster transfer from persistent storage saves more time than the extra CPU work costs.
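A quick sketch of the delta idea, to show why it helps. Two things here are my own assumptions: the readings are stored as scaled integers (say, hundredths of a degree) so the deltas are exact, and zlib from the Python standard library stands in for lz4, which is a separate package. The sample data is synthetic.

```python
import struct
import zlib


def delta_encode(values):
    """Keep the first value, then store each difference from the previous one."""
    if not values:
        return []
    return [values[0]] + [cur - prev for prev, cur in zip(values, values[1:])]


def delta_decode(deltas):
    """Rebuild the original values by running a cumulative sum."""
    values, total = [], 0
    for delta in deltas:
        total += delta
        values.append(total)
    return values


def pack(values):
    """Serialize as little-endian 64-bit integers."""
    return struct.pack(f"<{len(values)}q", *values)


# Synthetic, slowly drifting signal (scaled integers, an assumption).
steps = [0, 1, 0, -1, 0, 0, 1, 0]
readings = [2150]
for i in range(9_999):
    readings.append(readings[-1] + steps[i % len(steps)])

raw_blob = zlib.compress(pack(readings))
delta_blob = zlib.compress(pack(delta_encode(readings)))

print("uncompressed:", len(pack(readings)), "bytes")
print("compressed raw:", len(raw_blob), "bytes")
print("compressed deltas:", len(delta_blob), "bytes")
```

Storing a full value every N samples, as suggested, would bound how far back `delta_decode` has to sum before it can return a given sample.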

Since the samples are at regular intervals, the time stamp only needs to be listed in the header. Then you seek to the offset for the sample that needs to be returned and calculate the time stamp.

Ideally yeah, but we have to account for possible gaps in the data too. Like, someone tripped over the power cord or something and we go for a few minutes without getting anything back.

If you store the delta between samples instead of the sample itself, the dataset becomes very compressible for common datasets, where values don’t change very much. The actual value could be stored every so many samples to lower the burden on the CPU in calculating the values.

Compress the data using something like lz4 before storing. Often, this is a win-win: it reduces the storage space, and the faster transfer from persistent storage saves more time than the extra CPU work costs.

That might help. We’ve kept everything pretty straightforward for now just to make sure we can find the data set/timestamp we need quickly. Trends in 3.3 (when we introduced this storage backend, but before interactive trends shipped) should be noticeably faster to load than they were in earlier versions.

You could also store [Time difference]/Interval - This will produce easily compressible output and you can still account for jitter.
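Here is one possible reading of that suggestion, as a sketch only: store each gap between consecutive timestamps as a whole number of intervals plus a small jitter remainder in milliseconds. For healthy data nearly every pair is (1, 0), which compresses very well, and an outage shows up as a single larger step count. The function names and the pair format are invented for illustration.

```python
def encode_timestamps(timestamps_ms, interval_ms):
    """Return (first_timestamp, [(steps, jitter_ms), ...])."""
    if not timestamps_ms:
        raise ValueError("need at least one timestamp")
    pairs = []
    for prev, cur in zip(timestamps_ms, timestamps_ms[1:]):
        diff = cur - prev
        steps = round(diff / interval_ms)  # whole intervals elapsed
        pairs.append((steps, diff - steps * interval_ms))  # leftover jitter
    return timestamps_ms[0], pairs


def decode_timestamps(first_ms, pairs, interval_ms):
    """Invert encode_timestamps exactly."""
    timestamps = [first_ms]
    for steps, jitter_ms in pairs:
        timestamps.append(timestamps[-1] + steps * interval_ms + jitter_ms)
    return timestamps


# One-second sampling with a little jitter and a three-minute outage.
stamps = [0, 1000, 2003, 3000, 183000, 184000]
first, pairs = encode_timestamps(stamps, interval_ms=1000)
print(pairs)
```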

Good read here that talks about Facebook’s TSDB “Gorilla”: “Gorilla: A fast, scalable, in-memory time series database” (the morning paper).

Jonathan,

I understand what you are saying about averaged data sets to keep the trend displays snappy. My question is about the raw data: If I download a pen to the .csv file, will it have the raw data? That is, the exact values at the prescribed interval and not the averaged values used in rendering the trends for display?

Thanks,

Arun

Hi Jonathan,

Are there any limits on the number of interactive trends you can have on a groov server?

Mark.

Yup, downloaded data is always the raw scanned set, nothing interpolated.

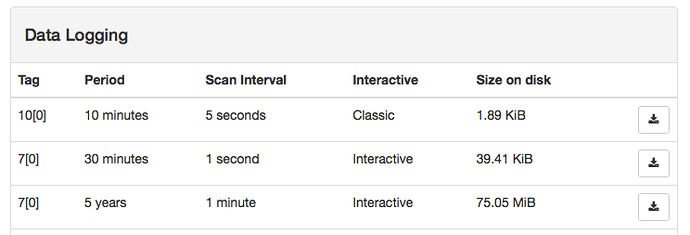

There’s a limit of roughly 2 GiB on how much scanned data is tracked. We really should display that usage somewhere people can see it, but we didn’t get that ready in time for the 3.4 release.

At the moment, when you hit the limit, newly created trends just won’t work. I think there’s a knowledge base article pending for it, but it hasn’t been published yet. For what it’s worth, you’ll hit it if you create around 25 five-year trends with a 1 minute update interval, all pointing at different tags. (There are other combinations that will hit it too, of course; that’s just the easiest way to do it.)
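As a rough cross-check of that figure, here is a back-of-envelope sketch. It assumes 16 bytes per raw sample, assumes the averaged sets double the size (the worst case mentioned earlier in the thread), and assumes all of that counts toward the limit. None of those accounting details are confirmed, and the names are made up.

```python
LIMIT_BYTES = 2 * 2**30        # roughly 2 GiB, per the post above
BYTES_PER_RAW_SAMPLE = 16      # 8-byte timestamp + 8-byte value


def worst_case_trend_bytes(period_minutes: int, interval_seconds: int) -> int:
    """Raw storage for one tag, doubled for the averaged sets (assumed)."""
    samples = period_minutes * 60 // interval_seconds
    return samples * BYTES_PER_RAW_SAMPLE * 2


def trends_that_fit(period_minutes: int, interval_seconds: int) -> int:
    """How many distinct-tag trends of this shape fit under the limit."""
    return LIMIT_BYTES // worst_case_trend_bytes(period_minutes, interval_seconds)


five_years = 5 * 365 * 24 * 60  # minutes
print(worst_case_trend_bytes(five_years, 60), "bytes per tag")
print(trends_that_fit(five_years, 60), "tags before hitting the limit")
```

Under those assumptions the estimate lands on 25 tags, in line with the number quoted above. A trend with several pens would count once per distinct tag.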

Are we also correct in assuming that the raw data ONLY gets tossed when it’s older than how long you’ve configured your trend for? In other words, if you configure your trend for 1 year, when a year is up you lose all the data that’s older than 1 year?

Yes, that’s correct (I just checked a few of my new trends).

Once the total time span is full, the oldest data falls off the end (into the great bit bucket in the sky?).

So my 14 day trend only has 14 days at the sample rate and my 31 day has 31 days.

I checked these via the CSV file for each.

Your statement ‘when a year is up you lose all the data that’s older than 1 year’, while correct, is a bit melodramatic.

You simply drop one bit of data off the end when the new bit gets added to the trend.

So if you have a 1 year trend at a 15 minute update interval, at 1 year and 15 minutes, one bit of data joins and one drops off.

There is no ‘lose all the data’.

tl;dr It’s a FIFO. First in, first out at the update interval.

Good to hear! Thanks for clarifying.

The data buffers are implemented as circular buffers, so it’s possible to end up with data older than the chart’s period in there. For example, if you configure a 14 day trend, run it for a few days, then for whatever reason groov can’t talk to the device for a day, then things recover, the oldest data will end up at 15 days old for a while until that gap gets rolled over again.

Or put another way: the buffers are a fixed size (period in minutes * 60 / sample rate in seconds), we don’t write empty values into the buffers, and we don’t explicitly expire old data: we just wait until it gets overwritten naturally.
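A toy model of that behavior, in case it helps picture it. This is only a sketch based on the description above, not the real implementation: the class and method names are invented, and it uses a one-hour sample rate to keep the numbers small.

```python
class TrendBuffer:
    """Fixed-size ring: no empty slots written for gaps, no explicit expiry."""

    def __init__(self, period_minutes: int, interval_seconds: int):
        # Size formula from the post: period in minutes * 60 / sample rate.
        self.capacity = period_minutes * 60 // interval_seconds
        self.slots = [None] * self.capacity
        self.next_index = 0

    def append(self, timestamp, value):
        # Overwrite whatever is in the next slot; that is the only "expiry".
        self.slots[self.next_index] = (timestamp, value)
        self.next_index = (self.next_index + 1) % self.capacity

    def oldest_timestamp(self):
        stamps = [slot[0] for slot in self.slots if slot is not None]
        return min(stamps) if stamps else None


# 14 day trend, one sample per hour; timestamps are in hours for readability.
buf = TrendBuffer(period_minutes=14 * 24 * 60, interval_seconds=3600)

hour = 0
written = 0
while written < buf.capacity:
    if not (72 <= hour < 96):  # simulated one-day outage after three days
        buf.append(hour, 0.0)
        written += 1
    hour += 1

newest = hour - 1
age_days = (newest - buf.oldest_timestamp()) / 24
print(f"capacity={buf.capacity} slots, oldest sample is {age_days:.2f} days old")
```

With the one-day outage, the buffer fills a day later than it otherwise would, so the oldest sample is nearly 15 days old when it is finally overwritten.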

(groov 3.2 and earlier stored data differently and did explicitly expire data.)

True/False: the only way to “clear out” that circular buffer, is to delete the trend and start over? That’s the only way I can think to do it…

Just changing the period on the trend, saving your page, and then changing it back should do it: we throw out all accumulated data on period/interval changes.

That said: that’ll only work if that same (tag, period, interval) combination isn’t in use on another trend somewhere. We do what we can to avoid storing duplicate data.

We’re working on tools to better manage that sort of thing, and give you a window into how much space is being used on disk, etc, but they aren’t ready yet.

Well that explains why my 40 trends (20 with 2 pens and 20 with 3 pens) only go to 1 year, and I can’t add additional trends (except exact copies of an existing trend). I’ve got poor Kathy trying to figure that out right now…

I need to add 20 more trends with 2 more pens because I could not put both of them in the first trend, which tracks the same type of temperature data. The trends only handle 4 pens, not 5.

I also need to make the trends do 5 years because there is no way to programmatically dump the trend data to a file. Btw, I would hate to be the guy who has to download the trend data “one pen at a time”…

So apparently if I want to add the additional 2 pens, I’ll need to reduce the storage value from 1 year to 6 months or something.

I also need to make the trends do 5 years because there is no way to programmatically dump the trend data to a file.

The download links for trend data are stable, and usable when you’re not logged into groov. We embed the current user’s API key in the trend download link to make them easy to automate.

My general recommendation for getting that data out is:

- Create a user for downloading the data. (It can be an Operator in 3.4, but prior to that needs to be an Editor.)

- Log into groov as that user.

- For each trend you want to get data out for, copy the download link for the trend.

- Using a cron job or something similar, download the trend data on a regular schedule for importing into whatever you like.

If you change the user’s API key you’ll need to update those copied links, but that’s just a simple find/replace. The links will look something like this:

https://my.groov.com/api/v0/data-logging/1420_0_525600_60_true/scanned-data.csv?api_key=HELLO_THIS_IS_AN_API_KEY
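For the scheduled download step, something along these lines could be run from cron. It is a minimal sketch using only the Python standard library. The helper names are made up, and the link is just the example above, so substitute the download links copied from your own trends.

```python
import urllib.request
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_api_key(link: str, new_key: str) -> str:
    """Return the same download link with its api_key parameter replaced."""
    parts = urlsplit(link)
    query = [
        (name, new_key if name == "api_key" else value)
        for name, value in parse_qsl(parts.query)
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


def download_trend(link: str, dest_path: str, timeout: float = 30.0) -> None:
    """Fetch one trend's CSV and write it to dest_path."""
    with urllib.request.urlopen(link, timeout=timeout) as response:
        data = response.read()
    with open(dest_path, "wb") as out:
        out.write(data)


# Example link from the post; the key shown is a placeholder.
EXAMPLE_LINK = (
    "https://my.groov.com/api/v0/data-logging/"
    "1420_0_525600_60_true/scanned-data.csv?api_key=HELLO_THIS_IS_AN_API_KEY"
)
print(with_api_key(EXAMPLE_LINK, "NEW_KEY"))
```

Calling `download_trend(link, "some_trend.csv")` once per copied link would give you one CSV per pen, and `with_api_key` covers the find/replace step if the user's key ever changes.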

Btw, I would hate to be the guy who has to download the trend data “one pen at a time”…

Again, better tools for managing this stuff are on the roadmap.

So apparently if I want to add the additional 2 pens, I’ll need to reduce the storage value from 1 year to 6 months or something.

Yes, probably.

Jonathan,

Any thoughts on adding the ability for groov trends to send a copy of data to an external metric database (graphite, influxdb, etc.)? This would be a good way to provide a long term storage backup and the protocols are pretty simple. I currently have charts that do this in my strategies.